It’s no secret that Insanity Radio is working on a visualised radio platform. This post will be the first in a series documenting how we did it.

For context, we’re a very small team of (mostly student) broadcast engineers. We’ve never engineered a telly station before, so this is an entirely new field and we’re practically re-inventing it as we go along.

Oddly, we’ll start at what most will consider the last step: distribution.

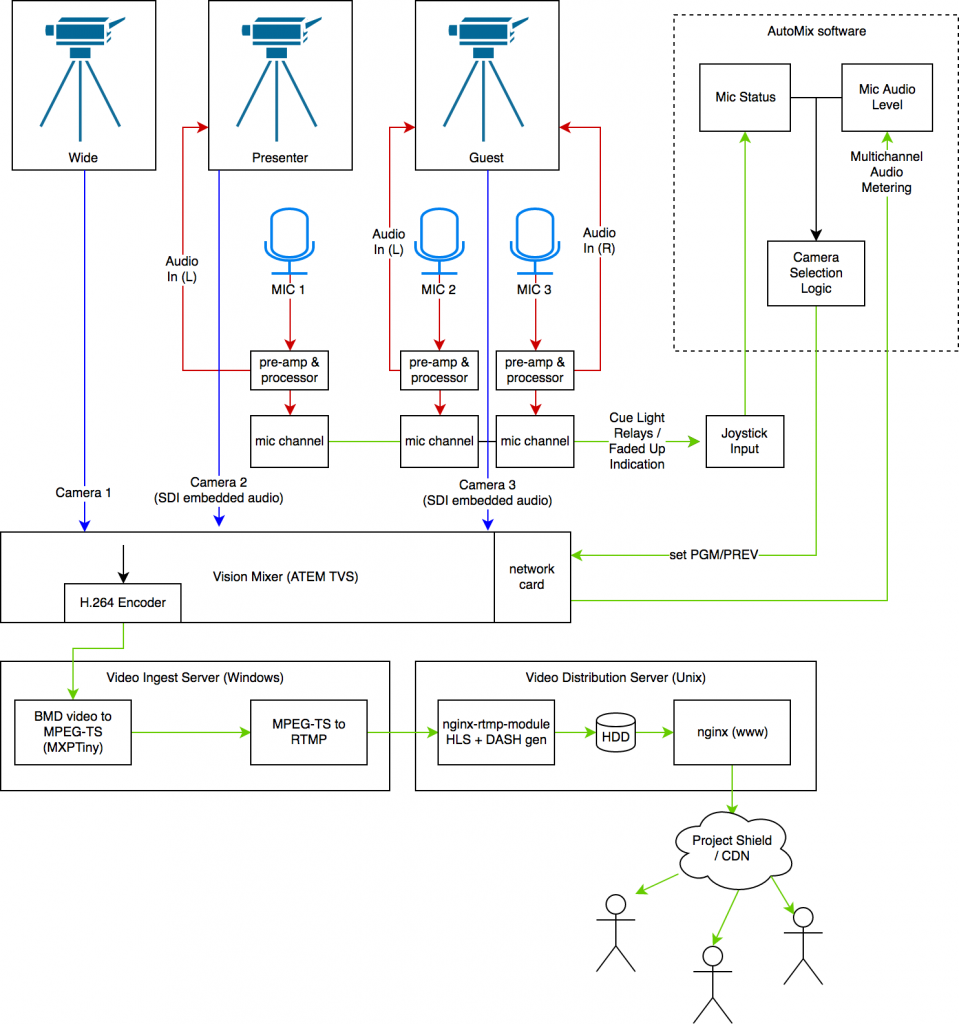

Falcon is built upon a Blackmagic ATEM Television Studio (TVS). The 1080p (HD) version was released a few months after we put in the original purchase orders, which kinda sucked as it limited us to 1080i or 720p. At least we have the built-in encoder to work with.

Input to an encoder like this would usually come from a station’s distribution amplifier. In this case, our TVS is our distribution amplifier and runs our main playout facility. Cheap and dirty, but reliable.

When working with online distribution, you can summarise the components in a pretty short list:

- Capturing the programme (“PGM”) video and audio.

- Transcoding this video to a suitable format

- Serving this video to users over the Internet

- [Logging this captured video]

Capturing PGM

The ATEM Television Studio has a built-in USB H.264 encoder. Great. Encoding H.264, even with modern hardware, is computationally expensive. If we could use this, then we wouldn’t have to worry about re-encoding later in the chain (except to downscale, but that’s not important at this point).

Problem: support for this H.264 encoder is shoddy. Live streaming with it is a bit complicated, and the drivers don’t run on Linux (why? Even Blackmagic support don’t know). As Insanity’s backbone is Linux, this left a bad taste in the mouths of the engineers. Pushing on regardless, the first thing we had to do was install Windows Server on a spare rack server, and then the Blackmagic drivers.

Next problem: “Media Express” can’t stream. We looked at several alternatives that can. We purchased an MX Light license, but discovered that it would crash after being left to free run for over 24 hours. Drat. We needed better reliability.

The solution? A piece of open source software called MXPTiny.

MXPTiny doesn’t have many features, so you have to script it yourself. We did this, but were greeted later in the chain with horrible encoding failures, likely due to a bug in ffmpeg’s RTMP streaming. This wouldn’t do.

After several days of scratching our heads, we came across another piece of software: Nimble Streamer. Although Nimble itself is “freeware”, it is configured through a cloud platform called WMSPanel. WMSPanel is very expensive ($30/month, which is massive for a community station). Fortunately, a Stack Overflow answer said that if you remove the node from WMSPanel after initial configuration, it will continue to operate just fine. Great.

So, that left us with these final configurations:

MXPTiny

Prev. Cfg: C:\path\to\ffmpeg.exe -i \\.\pipe\DeckLink.ts -codec copy -f mpegts "udp://127.0.0.1:30001"

Make sure to lower the video bitrate (<5 Mbps is ideal, otherwise most users will stall). Also, a high bitrate causes inconsistent chunk sizes in DASH, which can confuse quite a few players.
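Before pointing Nimble at the UDP port, it can help to confirm that ffmpeg is really emitting MPEG-TS on it. The following is a minimal sketch, not part of our actual setup: it relies only on the fact that MPEG-TS packets are 188 bytes long and start with the sync byte 0x47. The function names are hypothetical, and the listener has to run while nothing else (Nimble included) is bound to the port.

```python
import socket

TS_PACKET_SIZE = 188   # fixed MPEG-TS packet length in bytes
TS_SYNC_BYTE = 0x47    # every MPEG-TS packet starts with this byte


def looks_like_mpegts(datagram: bytes) -> bool:
    """True if the datagram is a whole number of TS packets, each starting with 0x47."""
    if not datagram or len(datagram) % TS_PACKET_SIZE != 0:
        return False
    return all(
        datagram[offset] == TS_SYNC_BYTE
        for offset in range(0, len(datagram), TS_PACKET_SIZE)
    )


def check_udp_stream(host: str = "127.0.0.1", port: int = 30001, samples: int = 50) -> int:
    """Receive `samples` datagrams and return how many look like MPEG-TS.

    Raises a timeout error if nothing arrives within five seconds.
    """
    good = 0
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        sock.settimeout(5.0)
        for _ in range(samples):
            data, _addr = sock.recvfrom(65535)
            good += looks_like_mpegts(data)
    return good
```

Calling `check_udp_stream()` with MXPTiny running should return a count close to `samples`; a count of zero suggests ffmpeg is sending something other than MPEG-TS.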

Nimble Streamer (via WMSPanel)

Set up a UDP server on localhost:30001

Enable RTMP server for the node

Transcoding

We didn’t need to transcode our video. For us, 1080p25, as captured from the H.264 encoder, was ideal. The less overhead, the better. The solution was easy: send the MPEG-TS stream from MXPTiny to Nimble Streamer, which, instead of transcoding, just transmuxes it into RTMP.

If you’re looking for a better answer, sorry: we don’t have one.

Serving Video

If you haven’t already, read up on HLS and MPEG-DASH. Insanity’s platform exclusively uses them – the RTMP server isn’t public.

The HLS and DASH files are generated using nginx-rtmp-module (the sergey-dryabzhinsky fork). This contains a complete enough DASH implementation to be playable with dash.js. Why this and not Nimble Streamer? The sooner we could get back into familiar open source territory, the better.

The NGINX server was configured to pull video from our Nimble Streamer node, and to serve Falcon’s video in both HLS and DASH formats.

    server {
        listen 1935;
        chunk_size 4000;

        application falcon {
            deny publish all;
            pull rtmp://10.0.69.69:1935/falcon/video name=video static;
            allow play all;
            live on;

            hls on;
            hls_path /srv/dash/falcon/hls;
            hls_fragment 10s;
            hls_playlist_length 1m;
            hls_continuous on;
            hls_cleanup on;

            dash on;
            dash_path /srv/dash/falcon/dash;
            dash_fragment 10s;
            dash_playlist_length 60m;

            hls_variant _hi BANDWIDTH=192000;
        }
    }
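As a sanity check on the numbers in that config, the fragment and playlist lengths determine how many segments each manifest holds at once. A rough sketch in Python follows; the 5 Mbps figure is an assumed upper bound taken from the bitrate advice earlier, not a measurement, and the function names are made up for illustration.

```python
def segments_in_window(fragment_seconds: int, playlist_seconds: int) -> int:
    """Number of whole fragments that fit in the playlist window."""
    return playlist_seconds // fragment_seconds


def window_size_bytes(bitrate_bps: int, playlist_seconds: int) -> int:
    """Approximate bytes of media held for the window at a constant bitrate."""
    return bitrate_bps * playlist_seconds // 8


hls_segments = segments_in_window(10, 60)            # hls_fragment 10s, hls_playlist_length 1m
dash_segments = segments_in_window(10, 60 * 60)      # dash_fragment 10s, dash_playlist_length 60m
dash_bytes = window_size_bytes(5_000_000, 60 * 60)   # assumed 5 Mbps stream

print(hls_segments, dash_segments, dash_bytes / 1e9)
```

That works out to 6 HLS segments, 360 DASH segments and roughly 2.25 GB of DASH media on disk for the hour. Real fragments are cut on keyframes, so actual lengths and counts will wobble a little around these figures.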

Making It Scale

Video serving is expensive: at 5 Mbps per viewer, a gigabit link tops out at around 200 clients, and that’s before any other traffic. This is the problem CDNs were created to solve, and CDNs are great. Most big media companies use Akamai. Most small companies use Cloudflare.

Akamai is expensive. Cloudflare’s terms prohibit you serving lots of video.

The solution? Google’s Project Shield.

Available exclusively to small, independent media groups, this was ideal for us. The best bit is that Shield’s terms don’t prevent you from serving multimedia.

As DASH and HLS both create long (ish) lived segments that are statically served to clients, they are ideal to scale out. The manifest files only update when a new segment is published – every ten seconds.

The edge nodes were configured to cache the manifest files for 10 seconds, and to cache the DASH segments indefinitely. As the presentation delay in DASH is set to 60 seconds, this allows plenty of time for the CDN to expire and update manifests if and when necessary.
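The caching rule boils down to "short TTL for manifests, long TTL for segments". Here is a minimal sketch of that rule as a function that picks a `Cache-Control` header from the request path. It is an illustration rather than our edge configuration: the file extensions, the one-year stand-in for "indefinitely" and the `no-cache` fallback are all assumptions.

```python
from pathlib import PurePosixPath

MANIFEST_SUFFIXES = {".mpd", ".m3u8"}   # DASH and HLS manifests
SEGMENT_SUFFIXES = {".m4v", ".m4a"}     # assumed DASH segment extensions

MANIFEST_TTL_SECONDS = 10               # one manifest update per 10 s segment
SEGMENT_TTL_SECONDS = 365 * 24 * 3600   # assumption: one year as "indefinitely"


def cache_control_for(url_path: str) -> str:
    """Pick a Cache-Control header value based on the file extension."""
    suffix = PurePosixPath(url_path).suffix.lower()
    if suffix in MANIFEST_SUFFIXES:
        return f"public, max-age={MANIFEST_TTL_SECONDS}"
    if suffix in SEGMENT_SUFFIXES:
        # Segments never change once written, so they can be cached for good.
        return f"public, max-age={SEGMENT_TTL_SECONDS}, immutable"
    # Anything else: be conservative and make caches revalidate.
    return "no-cache"
```

For example, `cache_control_for("/falcon/dash/video.mpd")` gives the 10 second policy, while a segment path ending in `.m4v` gets the long-lived one.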

Initial tests showed that if several people were playing the stream through Shield, most client requests hit its cache. With the power of Google’s infrastructure behind the project, we can hopefully sleep well at night with this platform being able to handle the worst spikes. Wonderful.

Logging

The DASH server is currently configured to store/serve 1 hour of video. We’ll likely up this in the future, but in the meantime this allows us to reconstruct any video from the last hour. As there is no transcoding of DASH segments, this is trivial.

A piece of software (Grabby, soon to be on the Insanity Radio GitHub) is able to pull DASH segments and reconstruct them without much overhead. This works by concatenating the initialisation segment with the segments we want. This is done twice: once for video, once for audio. ffmpeg then joins our two temporary files together to create our final rip. It’s also able to use ffmpeg to forward video to YouTube and Facebook over RTMP.
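The concatenate-then-mux idea can be sketched in a few lines of Python. This is not Grabby’s code, just an illustration of the technique described above; the function names and file names are hypothetical, and the segments are assumed to have been downloaded to local files already.

```python
import shutil
from pathlib import Path
from typing import Iterable, List


def concat_segments(init_segment: Path, media_segments: Iterable[Path], output: Path) -> None:
    """Write the initialisation segment followed by each media segment, in order."""
    with output.open("wb") as out:
        for part in [init_segment, *media_segments]:
            with part.open("rb") as src:
                shutil.copyfileobj(src, out)


def build_mux_command(video_track: Path, audio_track: Path, output: Path) -> List[str]:
    """Build an ffmpeg command that joins the two tracks without re-encoding."""
    return [
        "ffmpeg",
        "-i", str(video_track),
        "-i", str(audio_track),
        "-c", "copy",          # stream copy: keep the original H.264 and audio data
        str(output),
    ]
```

Run `concat_segments` once for the video segments and once for the audio segments, then hand the result of `build_mux_command` to `subprocess.run` to produce the final rip. Because of `-c copy`, ffmpeg only rewraps the streams, which is why this stays cheap.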

When used as part of this design chain, there is absolutely no loss in quality from multiple encoding. We’re still using the original H.264 data from the Blackmagic, so video is never re-encoded after capture. This also allows us to run the software on inexpensive, low-end hardware.

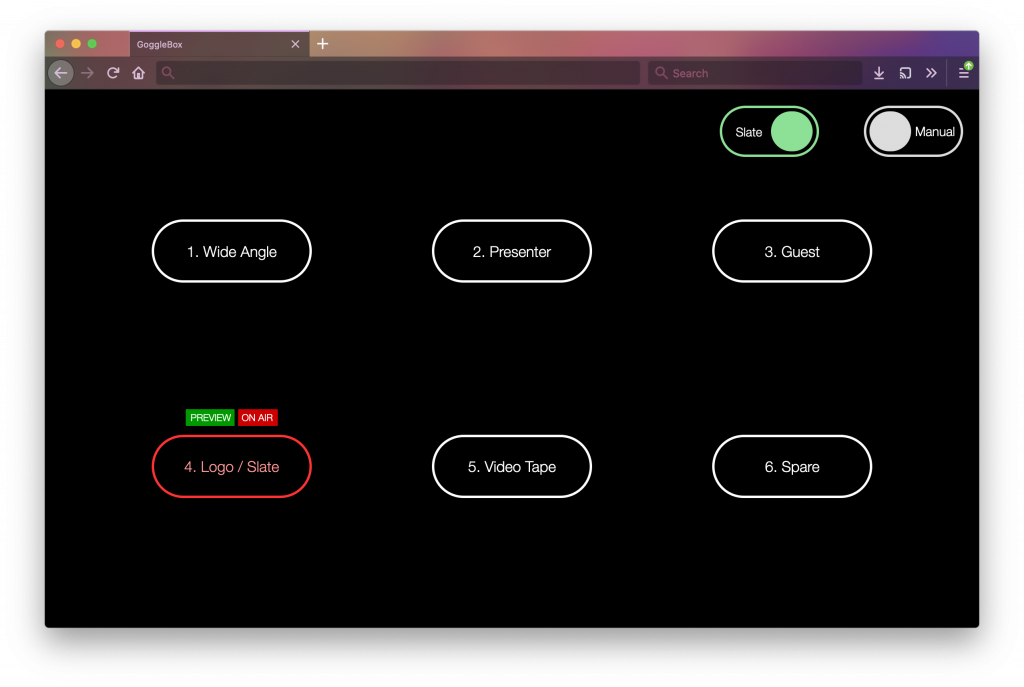

The next post in the series documents how to automate vision mixing.